Imagine you’re the Head of Application Security at a FinTech company. One night, you get a terrifying alert: your AI-powered fraud detection system has been compromised, not by a hacker, but by a rogue AI agent pretending to be a trusted service. This isn’t a bad dream. It’s a real risk for any company using agentic AI, meaning systems that make decisions on their own. But don’t panic! With smart threat modeling, you can build resilient AI systems that stay ahead of these dangers. In this post, we’ll show you how to spot threats and protect your agentic AI from attack.

Agentic AI isn’t your average algorithm. These autonomous systems make decisions and interact with little human oversight, acting like digital decision-makers with immense potential and equally immense risks. Traditional threat modeling tools like STRIDE or PASTA aren’t fully equipped for AI-specific dangers like adversarial attacks or autonomous misbehavior. A specialized framework is crucial to address these unique challenges, especially for FinTech leaders protecting high-stakes systems from breaches that could shatter customer trust.

For enterprises building mission-critical agentic systems, threat modeling needs continuous tuning. That’s where our AI-integrated threat modeling services come in.

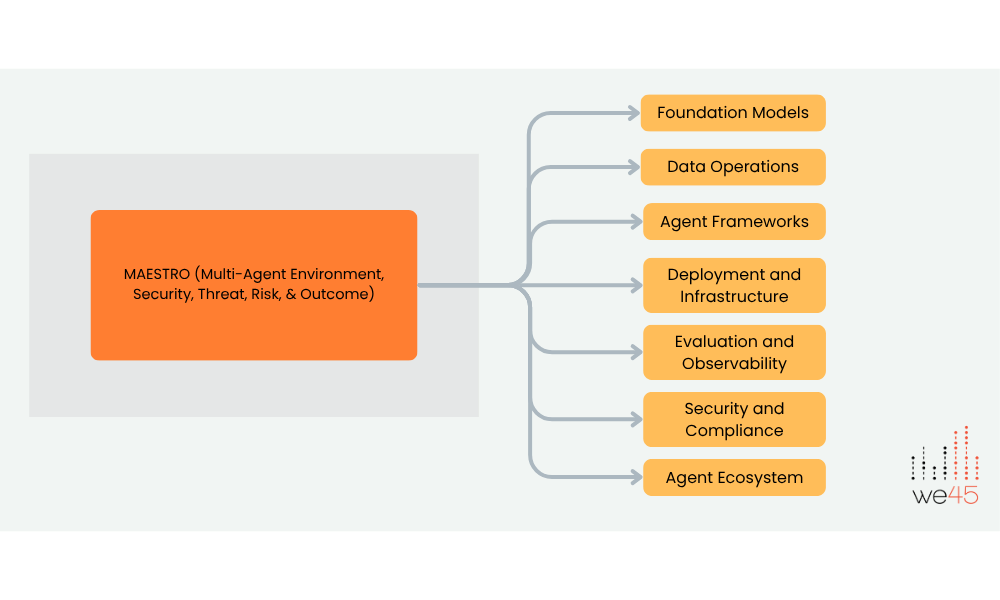

MAESTRO (Multi-Agent Environment, Security, Threat, Risk, & Outcome) is a groundbreaking framework tailored for agentic AI, offering a granular, proactive approach to security.

MAESTRO extends security coverage to risks listed in the 2025 OWASP Top 10 for LLM Applications, including prompt injection, excessive agency, and improper output handling.

Unlike traditional frameworks, it analyzes threats across seven distinct layers of the agentic architecture, so no part of the system is left out of the analysis. It builds on frameworks like STRIDE and PASTA, adds AI-specific threat considerations, prioritizes risks by likelihood and impact, and emphasizes continuous monitoring for evolving dangers.
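To make "prioritizes risks by likelihood and impact" concrete, here is a minimal Python sketch of a threat register scored with a simple risk matrix (risk = likelihood × impact, each rated 1 to 5). The rating scales, the `Threat` and `prioritize` names, the example threats, and their layer assignments are illustrative assumptions, not something MAESTRO itself prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    name: str
    layer: int       # MAESTRO layer (1-7); assignments below are illustrative
    likelihood: int  # 1 = rare ... 5 = almost certain
    impact: int      # 1 = negligible ... 5 = severe

    @property
    def score(self) -> int:
        # Simple risk matrix: likelihood x impact, ranging from 1 to 25
        return self.likelihood * self.impact


def prioritize(threats: list[Threat]) -> list[Threat]:
    """Validate ratings and return threats from highest to lowest risk."""
    for t in threats:
        if not (1 <= t.likelihood <= 5 and 1 <= t.impact <= 5):
            raise ValueError(f"{t.name}: ratings must be between 1 and 5")
    return sorted(threats, key=lambda t: t.score, reverse=True)


if __name__ == "__main__":
    register = [
        Threat("Prompt injection via customer chat", layer=3, likelihood=4, impact=4),
        Threat("Agent impersonation on the message bus", layer=7, likelihood=2, impact=5),
        Threat("Poisoned vector embeddings", layer=2, likelihood=3, impact=4),
    ]
    for t in prioritize(register):
        print(f"Layer {t.layer} | score {t.score:>2} | {t.name}")
```

A multiplicative score is the simplest option; teams often swap in weighted or banded scoring once they have real incident data.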

Here’s a closer look at all seven layers:

Layer 1 (Foundation Models): Focuses on securing the core AI models (such as GPT models) against vulnerabilities and adversarial attacks.

Layer 2 (Data Operations): Protects all data used by AI agents, including storage, processing, and vector embeddings, to prevent data poisoning or manipulation.

Layer 3 (Agent Frameworks): Secures the software frameworks and APIs that enable agent creation and interaction, preventing code injection and other attacks.

Layer 4 (Deployment and Infrastructure): Safeguards the underlying hardware and software (servers, networks, containers) hosting the AI systems.

Layer 5 (Evaluation and Observability): Provides monitoring to detect anomalies, evaluate performance, and debug agent behavior.

Layer 6 (Security and Compliance): Implements controls like secure key storage, credential rotation, data minimization, and encryption to prevent unauthorized access and ensure regulatory compliance.

Layer 7 (Agent Ecosystem): Secures the environment where multiple agents interact, using secure communication protocols, reputation systems, sandboxing, and runtime policy enforcement.
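Runtime policy enforcement in the agent ecosystem can start as simply as a deny-by-default allowlist checked before every tool call. The sketch below assumes a hypothetical `Policy` with per-agent tool permissions and a per-tool cap on an `amount` argument; the agent names, tool names, and limits are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    # Hypothetical per-agent allowlist: agent ID -> tool names it may call
    allowed_tools: dict[str, set[str]] = field(default_factory=dict)
    # Hypothetical per-tool cap on the "amount" argument
    max_amount: dict[str, float] = field(default_factory=dict)


def check(policy: Policy, agent_id: str, tool: str, args: dict) -> tuple[bool, str]:
    """Deny by default: allow only explicitly permitted (agent, tool) pairs
    whose arguments stay within configured limits."""
    if tool not in policy.allowed_tools.get(agent_id, set()):
        return False, f"{agent_id} is not allowed to call {tool}"
    limit = policy.max_amount.get(tool)
    if limit is not None and float(args.get("amount", 0)) > limit:
        return False, f"amount exceeds the {tool} limit of {limit}"
    return True, "ok"


# Example configuration for two hypothetical FinTech agents
POLICY = Policy(
    allowed_tools={
        "fraud-scorer": {"read_transactions", "flag_transaction"},
        "payments-agent": {"issue_refund"},
    },
    max_amount={"issue_refund": 500.0},
)
```

In this design, the orchestrator calls `check` before executing any tool; a `False` result is logged and refused, so an unknown or compromised agent gets nothing by default.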

MAESTRO recognizes that vulnerabilities in one layer can affect others. For example, a credential compromise at Layer 6 could let an attacker send malicious messages through the agent frameworks at Layer 3. By providing this comprehensive protection framework, MAESTRO helps organizations build more secure AI systems that can withstand sophisticated attacks while maintaining functionality.
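One way to keep a credential problem from turning into message spoofing is to sign every inter-agent message with a key bound to exactly one agent, and to revoke that key the moment it is suspected. This is a minimal sketch using Python’s standard `hmac` module; the in-memory `KEYS` registry and the function names are hypothetical. A production system would keep secrets in a vault or KMS, add replay protection (nonces or timestamps), and would likely prefer asymmetric signatures, because with HMAC any verifier holding the key could also forge messages.

```python
import hashlib
import hmac
import json
import secrets

# Hypothetical in-memory key registry: key ID -> (owning agent ID, secret).
# In production, secrets belong in a vault or KMS (MAESTRO Layer 6).
KEYS: dict[str, tuple[str, bytes]] = {}


def issue_key(agent_id: str) -> str:
    """Create a fresh signing key bound to one agent; return its key ID."""
    key_id = secrets.token_hex(8)
    KEYS[key_id] = (agent_id, secrets.token_bytes(32))
    return key_id


def revoke_key(key_id: str) -> None:
    """Drop a key, e.g. after a suspected credential compromise or on rotation."""
    KEYS.pop(key_id, None)


def _mac(secret: bytes, sender: str, body: dict) -> bytes:
    # Canonical JSON so both sides authenticate identical bytes; the sender
    # is covered by the MAC as well as the body.
    payload = json.dumps({"sender": sender, "body": body}, sort_keys=True,
                         separators=(",", ":")).encode()
    return hmac.new(secret, payload, hashlib.sha256).hexdigest().encode()


def sign(key_id: str, body: dict) -> dict:
    agent_id, secret = KEYS[key_id]
    return {"sender": agent_id, "key_id": key_id, "body": body,
            "mac": _mac(secret, agent_id, body).decode()}


def verify(message: dict) -> bool:
    """Accept a message only if its key is live, belongs to the claimed
    sender, and the MAC matches."""
    entry = KEYS.get(str(message.get("key_id", "")))
    if entry is None:
        return False
    agent_id, secret = entry
    if message.get("sender") != agent_id:
        return False  # valid key, wrong identity: likely impersonation
    expected = _mac(secret, agent_id, message.get("body"))
    return hmac.compare_digest(expected, str(message.get("mac", "")).encode())
```

Because each key maps to one agent, revoking a leaked key cuts off forged messages without having to trust whatever the `sender` field claims.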

When architecting secure multi-agent systems, layering MAESTRO on top of robust agent interaction designs can substantially improve resilience.

Agentic AI’s autonomy spawns unique risks, including goal manipulation, agent impersonation, prompt injection, and Sybil attacks.

These types of attacks often stem from common LLM misconfigurations or oversights, so it’s worth reviewing the most common LLM security failures your team should watch out for.
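A practical defense against prompt injection and improper output handling is to treat model output as untrusted data and execute only actions that match a strict schema. The sketch below does not detect injection; it limits what an injected instruction can make the agent do. `ACTION_SCHEMA`, the action names, and the `{"action": ..., "args": ...}` output shape are hypothetical.

```python
import json
from typing import Optional

# Hypothetical allowlist of actions the model may propose, with required
# argument names and types. Anything else is rejected.
ACTION_SCHEMA = {
    "flag_transaction": {"txn_id": str, "reason": str},
    "request_review": {"txn_id": str},
}


def parse_action(raw_output: str) -> Optional[dict]:
    """Treat model output as untrusted data: return a validated action or None."""
    try:
        proposal = json.loads(raw_output)
    except json.JSONDecodeError:
        return None  # free text (including injected instructions) is never executed
    if not isinstance(proposal, dict):
        return None
    action = proposal.get("action")
    fields = ACTION_SCHEMA.get(action) if isinstance(action, str) else None
    if fields is None:
        return None  # unknown or missing action
    args = proposal.get("args")
    if not isinstance(args, dict) or set(args) != set(fields):
        return None  # missing or unexpected arguments
    if not all(isinstance(args[name], expected) for name, expected in fields.items()):
        return None  # wrong argument types
    return {"action": action, "args": args}
```

Even if an attacker convinces the model to "transfer funds," the proposal is dropped because `transfer_funds` is not in the schema.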

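Threat modeling sets the stage, but resilience demands more. Back it with runtime controls: continuous monitoring for drift and anomalies, redundancy, human review of critical actions, and a tested incident response plan.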
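For anomaly monitoring, a rolling z-score over a per-agent metric (for example, actions per minute) is a simple starting point. This sketch assumes a 30-sample window, a 10-sample warm-up, and a threshold of three standard deviations; all three are illustrative defaults to tune, and detecting drift in model inputs or outputs usually needs richer statistics than this.

```python
from collections import deque
from statistics import mean, pstdev


class RateMonitor:
    """Flag samples of a per-agent metric (e.g. actions per minute) that sit
    far outside the recent baseline, using a rolling z-score."""

    def __init__(self, window: int = 30, threshold: float = 3.0, min_samples: int = 10):
        self.history = deque(maxlen=window)  # recent "normal" samples only
        self.threshold = threshold           # z-score that counts as anomalous
        self.min_samples = min_samples       # don't judge until a baseline exists

    def observe(self, value: float) -> bool:
        """Record a sample and return True if it looks anomalous."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma == 0:
                anomalous = value != mu
            else:
                anomalous = abs(value - mu) / sigma > self.threshold
        # Keep anomalies out of the baseline so a sustained attack
        # does not quietly become the new normal.
        if not anomalous:
            self.history.append(value)
        return anomalous
```

The trade-off of excluding anomalies from the baseline is that a legitimate, permanent change in workload will keep alerting until someone reviews it, which is usually the behavior you want for high-stakes agents.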

Agentic AI changes how you do business and how attackers find gaps. With a specialized threat modeling approach like MAESTRO, you build security into every layer, from the core model to the agent ecosystem.

Your teams stay ahead of rogue AI behavior, supply chain risks, and emerging threats without slowing innovation.

Want to make your AI resilient from design to deployment? Let’s build it together. Connect with we45’s AI security team and turn threat modeling into your AI’s strongest defense.

Agentic AI doesn’t just process data. It makes decisions and interacts autonomously, often with minimal human oversight. This autonomy creates new risks like rogue behaviors, impersonation, and goal manipulation that traditional threat models don’t fully cover.

You can use traditional frameworks like STRIDE or PASTA, but they won’t cover all AI-specific threats. For example, they don’t address adversarial prompts, agent misbehavior, or secure multi-agent interaction. MAESTRO extends these frameworks with AI-specific layers and continuous monitoring, so your security keeps pace with your AI’s autonomy.

MAESTRO covers seven critical layers, from the foundation model to the full agent ecosystem. It helps your team identify AI-specific threats, prioritize them by risk, and design controls that work in real-world deployments, so you get proactive defenses instead of reacting to breaches later.

Key risks include:

- Goal Manipulation: Attackers hijack an agent’s objectives.
- Agent Impersonation: Fake agents mimic trusted ones.
- Prompt Injection: Malicious input manipulates outputs.
- Sybil Attacks: Multiple fake agents overwhelm the system.

These can cause fraud, data leaks, or operational failures if left unchecked.
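A common Sybil defense is to make identity expensive: only agents from a verified registry count, and each operator gets a single voice no matter how many agents it runs. The sketch below applies that idea to a reputation-weighted fraud vote. `REGISTRY`, the operators, the reputation values, and `fraud_vote_share` are hypothetical, and the sketch assumes registration already involves out-of-band verification of the operator.

```python
# Hypothetical registry of attested agents: agent ID -> (operator, reputation 0-1).
# Registration is assumed to require out-of-band verification of the operator.
REGISTRY = {
    "scorer-a": ("bank-risk-team", 0.9),
    "scorer-b": ("bank-risk-team", 0.8),
    "scorer-c": ("vendor-x", 0.7),
}


def fraud_vote_share(votes: dict[str, bool], min_reputation: float = 0.5) -> float:
    """Reputation-weighted share of votes saying "fraud".

    Unregistered and low-reputation agents are ignored, and each operator
    counts once (via its highest-reputation agent), so spawning extra agents
    adds no weight.
    """
    best: dict[str, tuple[float, bool]] = {}
    for agent_id, says_fraud in votes.items():
        entry = REGISTRY.get(agent_id)
        if entry is None:
            continue  # unknown identity: possibly a Sybil
        operator, reputation = entry
        if reputation < min_reputation:
            continue
        if operator not in best or reputation > best[operator][0]:
            best[operator] = (reputation, says_fraud)
    total = sum(rep for rep, _ in best.values())
    if total == 0:
        return 0.0
    return sum(rep for rep, fraud in best.values() if fraud) / total
```

A hundred unregistered agents all voting "fraud" change nothing here; an attacker would need to compromise registered operators instead.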

Combine robust threat modeling with:

- Continuous monitoring for drift and anomalies
- Redundant systems to avoid single points of failure
- Human-in-the-loop checks for critical actions
- A tested incident response plan aligned with the NIST AI Risk Management Framework (AI RMF)
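Human-in-the-loop checks can be enforced in code rather than left to convention: critical actions are parked in a queue until a person approves or rejects them, while routine ones run directly. This is a minimal sketch; `ApprovalGate`, the `is_critical` rule, and the `execute` callback are hypothetical hooks you would wire to your own risk rules and review tooling.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ApprovalGate:
    """Hold critical agent actions for a human decision; run the rest directly."""
    is_critical: Callable[[dict], bool]          # hypothetical risk rule
    pending: list = field(default_factory=list)  # actions awaiting review

    def submit(self, action: dict, execute: Callable[[dict], str]) -> str:
        if self.is_critical(action):
            self.pending.append(action)
            return "pending_approval"
        return execute(action)

    def approve(self, index: int, execute: Callable[[dict], str]) -> str:
        """A human reviewer approved pending[index]: run it now."""
        return execute(self.pending.pop(index))

    def reject(self, index: int) -> dict:
        """A human reviewer rejected pending[index]: drop it (log it in practice)."""
        return self.pending.pop(index)
```

For example, a rule such as "refunds above 1,000 or any account closure" would send those actions to the queue while small refunds execute immediately.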

we45 helps you design, implement, and continuously refine AI security. From MAESTRO threat modeling to AI-specific pen testing and secure agent design, you get expert support tailored to your tech stack, without slowing innovation.