Modern digital operations rely heavily on massive data stores. While transactional data often resides in structured databases, vast volumes of unstructured information, such as media files, JavaScript assets, logs, and backups, are routinely managed within high-scale cloud object storage solutions. Azure Blob Storage serves as a cornerstone for this massive cloud ecosystem, optimized for availability, scale, and performance.

To facilitate application efficiency, developers frequently employ Azure Shared Access Signature (SAS) tokens. These tokens are essential security mechanisms, designed to grant temporary, delegated access to specific storage resources without exposing high-value, long-term credentials like the Storage Account Access Keys.

However, the analysis demonstrates that the misuse of SAS tokens, specifically through the creation of overly permissive, long-lived tokens, creates a critical, yet often overlooked, vulnerability. When such a token is inadvertently exposed (in code repositories, logs, or network traffic), it acts as a persistent backdoor, granting unauthorized actors rights functionally equivalent to the full storage account key. This exposure highlights a fundamental conflict: the tension between rapid development velocity, which often favors convenience and maximum permissions, and the foundational Zero Trust security principle that mandates least privilege.

Modern applications generate huge volumes of unstructured data that traditional relational database architectures cannot efficiently scale to accommodate. Azure Blob Storage is Microsoft's object storage solution, purpose-built for this environment. It provides highly scalable, secure, and available storage that serves as the foundation for powerful cloud-native applications, serverless architectures, and advanced services such as Azure Data Lake Storage for big data analytics.

A storage account organizes data into containers, which, in turn, hold various types of binary large objects (blobs). These include standard Block Blobs (for text and binary data, up to 190.7 TiB), Append Blobs (optimized for logging), and Page Blobs (used for Azure virtual machine VHD files). The systemic value of Blob Storage is high; it is the repository not only for public web assets but also for sensitive internal components like machine learning training data, internal system backups, and confidential application logs. If an overly broad token intended for a single public image is compromised, the threat actor gains potential access to these systemic organizational assets, which is why a storage compromise has such deep implications.

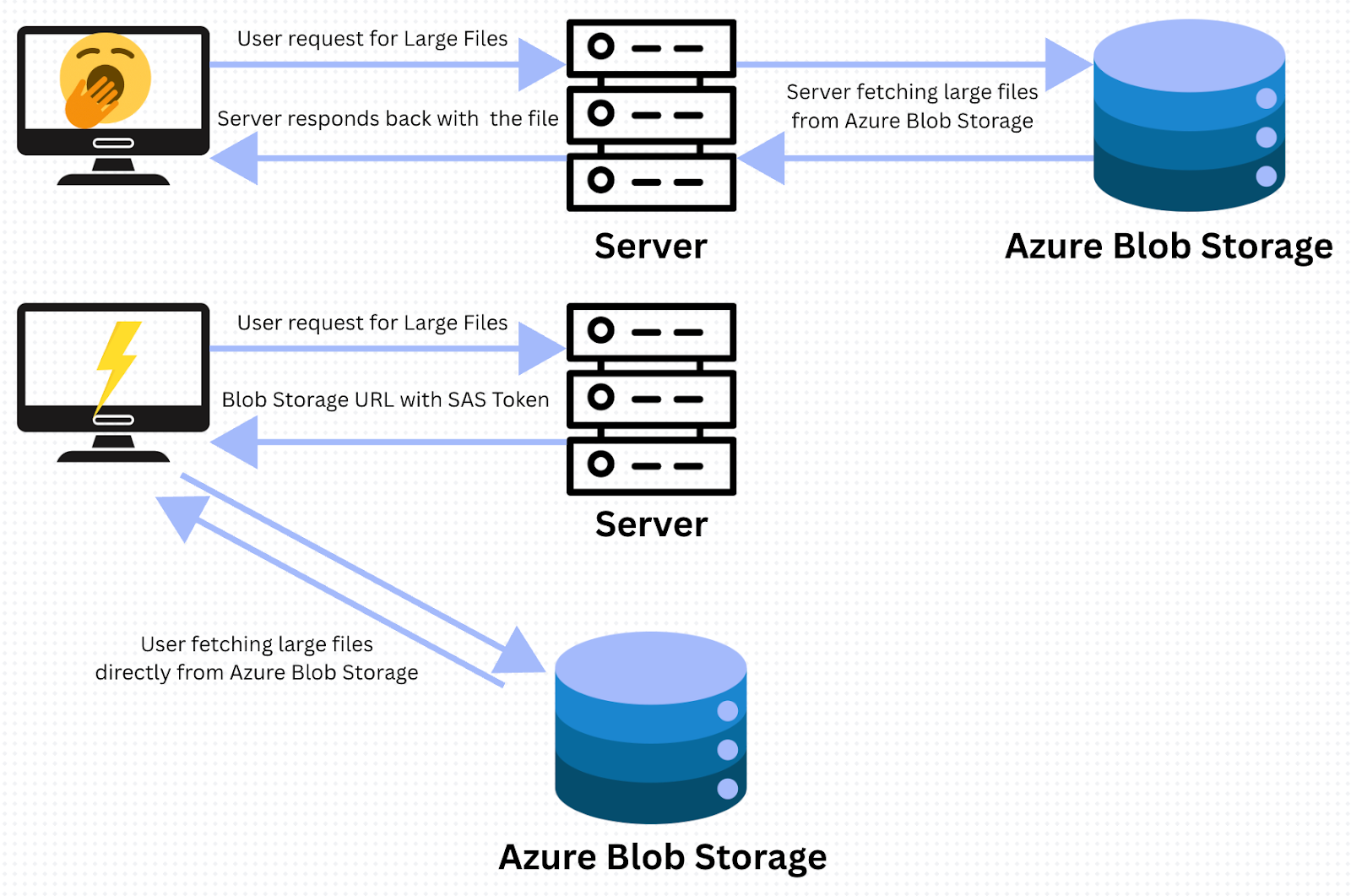

Client applications, such as web browsers fetching frontend assets or mobile applications downloading updates, must access objects in Blob Storage directly via HTTP/HTTPS endpoints.

Relying on the Storage Account Access Keys for client-side access is prohibited by security best practices. These keys are considered the master keys, as they grant unrestricted, full administrative access to the storage account data, including the critical ability to generate new SAS tokens. Protecting these keys (ideally by storing them securely in Azure Key Vault) is essential, which is why client access should instead go through a mechanism built for temporary delegation: the SAS token.

A Shared Access Signature (SAS) token is a signed Uniform Resource Identifier (URI) that provides delegated access to Azure Storage resources. These tokens are appended as query strings to the resource URL. They must be protected with the same vigilance as an account key and transmitted exclusively over HTTPS.

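Azure supports three types of SAS tokens, each with distinct scopes and security mechanisms: the Account SAS, the Service SAS, and the User Delegation SAS (UDSAS). The Account SAS and Service SAS are signed with the storage account key, while the User Delegation SAS is signed with Microsoft Entra ID credentials.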

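The security posture of a SAS token is defined by four core parameters embedded in the query string: the granted permissions (sp), the expiry time (se), the resource scope (sr), and, for an Account SAS, the covered services (ss).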
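As a rough illustration of where these parameters live, the sketch below pulls individual fields out of a SAS query string with standard shell tools. It assumes the four parameters in question are the permissions (sp), expiry (se), resource scope (sr), and services (ss) fields referenced later in this article. The token value and the helper name sas_param are hypothetical placeholders.

```bash
# Hypothetical SAS query string; every value here is a placeholder.
SAS_TOKEN='sv=2022-11-02&ss=bfqt&srt=sco&sp=rwdlacup&se=2030-01-01T00:00:00Z&spr=https&sig=REDACTED'

# sas_param NAME: print the value of one query-string field (empty if absent).
sas_param() {
  printf '%s\n' "$SAS_TOKEN" | tr '&' '\n' | sed -n "s/^$1=//p"
}

echo "permissions (sp): $(sas_param sp)"
echo "expiry (se):      $(sas_param se)"
echo "resource (sr):    $(sas_param sr)"
echo "services (ss):    $(sas_param ss)"
```

For this account-style token the sr field comes back empty, because account-level tokens describe their scope with ss and srt rather than sr.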


The primary cause for the proliferation of overly permissive SAS tokens is rooted in operational friction. Development teams often prioritize speed and convenience, sidestepping the extra effort that least-privilege policies impose during development in order to save time.

During proof-of-concept testing or rapid development phases, it is common practice for engineers to use full control permissions (sp=rwdlacup...) and set expiration dates years in the future. This prevents the disruption caused by tokens expiring during debug cycles. When these development configurations are migrated to production environments without proper security review or adjustment, the result is a critical, latent vulnerability.

When a compromised token grants broad scope (sr=c), covers multiple services (ss=bfqt), grants full control permissions (rwdlacup), and is active for years, it ceases to be a delegated temporary credential and becomes, in effect, an exposed secondary account key for the storage resource.
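A reviewer could triage a discovered token against these properties before any live testing. The following is a minimal sketch, not a complete policy check: the helper names sas_field and sas_risk_flags, the sample token, and the cutoff timestamp are all assumptions made for illustration.

```bash
# sas_field TOKEN NAME: print the value of one query-string field.
sas_field() {
  printf '%s\n' "$1" | tr '&' '\n' | sed -n "s/^$2=//p"
}

# sas_risk_flags TOKEN CUTOFF: print one line per risky property found.
# CUTOFF is an ISO 8601 UTC timestamp chosen by the reviewer.
sas_risk_flags() {
  sp=$(sas_field "$1" sp)
  se=$(sas_field "$1" se)
  sr=$(sas_field "$1" sr)
  ss=$(sas_field "$1" ss)
  case $sp in
    *[wd]*) echo "destructive permissions: sp=$sp" ;;
  esac
  if [ "$sr" = "c" ] || [ -n "$ss" ]; then
    echo "broad scope: sr=${sr:-none} ss=${ss:-none}"
  fi
  # ISO 8601 UTC timestamps of the same shape sort lexicographically.
  if [ -n "$se" ] && expr "$se" \> "$2" >/dev/null; then
    echo "long-lived: se=$se"
  fi
}

sas_risk_flags 'sv=2022-11-02&ss=bfqt&srt=sco&sp=rwdlacup&se=2030-01-01T00:00:00Z&sig=REDACTED' '2025-01-08T00:00:00Z'
```

The sample token trips all three checks, while a read-only, blob-scoped token that expires before the cutoff produces no output.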

This risk is substantiated by major incidents. In an incident discovered in June 2023, Microsoft AI researchers were found to have inadvertently exposed 38 TB of sensitive internal data. The exposure occurred because a researcher published a blob store URL containing an overly permissive SAS token in a public GitHub repository. This misconfiguration granted full access to the entire storage account, exposing proprietary tools, internal Teams messages, credentials, and backups of two employee workstations. This real-world incident confirms that such misconfigured tokens carry the highest level of security risk, often bypassing existing perimeter controls.

The prevalence of this issue stems from the ease of generating a powerful Account SAS versus the necessary complexity of configuring a precise and short-lived Service or User Delegation SAS. Security defenses must recognize this friction point and pivot toward prevention-by-default, employing mechanisms like Azure Policies to automatically reject or enforce maximum 7-day expiry limits on all non-UDSAS token creations.

When a high-value SAS token is leaked (e.g., found in public code repositories, logs, or exposed endpoints), the resulting compromise involves four distinct categories of business impact.

A compromised token with Read (r) and List (l) permissions grants the threat actor the ability to enumerate all containers and blobs within the authorized scope, effectively mapping the entire data structure.

This capability leads directly to large-scale data exfiltration, encompassing sensitive company files, proprietary intellectual property, application logs, and, critically, Personally Identifiable Information (PII) stored in database backups or user records. The Microsoft incident, where internal communications and workstation profiles were exposed, serves as a stark reminder of the breadth of sensitive data potentially residing in backup storage accounts.

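If the token includes Write (w) and Update (u) permissions, an attacker can modify or replace existing files. This enables two major attack paths: malware distribution, where legitimate files are swapped for malicious ones, and web application hijacking, where malicious code (for example, an XSS payload) is injected into web assets served from storage.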

The inclusion of the Delete (d) permission grants the attacker the ability to wipe entire containers or storage accounts rapidly.

A malicious actor can execute a mass deletion script, immediately crippling application functionality reliant on those static assets. This targeted attack results in an effective Denial of Service (DoS) condition. Recovery requires immediate token revocation and extensive time and effort to restore critical data from backups, incurring severe operational downtime and reputational damage.

Permissions such as Create (c), Add (a), and Write (w) allow a threat actor to upload arbitrary content.

This vector is exploited by taking advantage of the victim organization's cloud subscription to store illicit data or simply to saturate the storage capacity and throughput limits. By uploading enormous volumes of large unnecessary files, the attacker can rapidly generate dramatic and unexpected spikes in the victim's billing costs, using the organization's cloud resources for their own purposes.

A Shared Access Signature is a secret, and like any leaked credential, its presence in publicly accessible locations poses an immediate threat. Given the typically long lifespan of misconfigured SAS tokens, security researchers and pentesters must treat every instance of an exposed token as a critical vulnerability. The primary vector for compromise is accidental publication in publicly indexable or client-facing application layers.

Key locations where overly permissive SAS tokens are commonly discovered include:

Public code repositories are the single most common exposure point. Tokens are frequently checked into public source control platforms (e.g., GitHub, GitLab) within configuration files, debugging notes, or inline code during contributions to open-source projects. Searching historical commits is often necessary, as a token may have been removed from the latest version yet remain valid until it expires or is revoked. For this purpose, tools like TruffleHog can be highly effective: it includes extensive regex patterns for detecting sensitive keys and secrets, and it also scans through commit history to uncover previously exposed tokens.

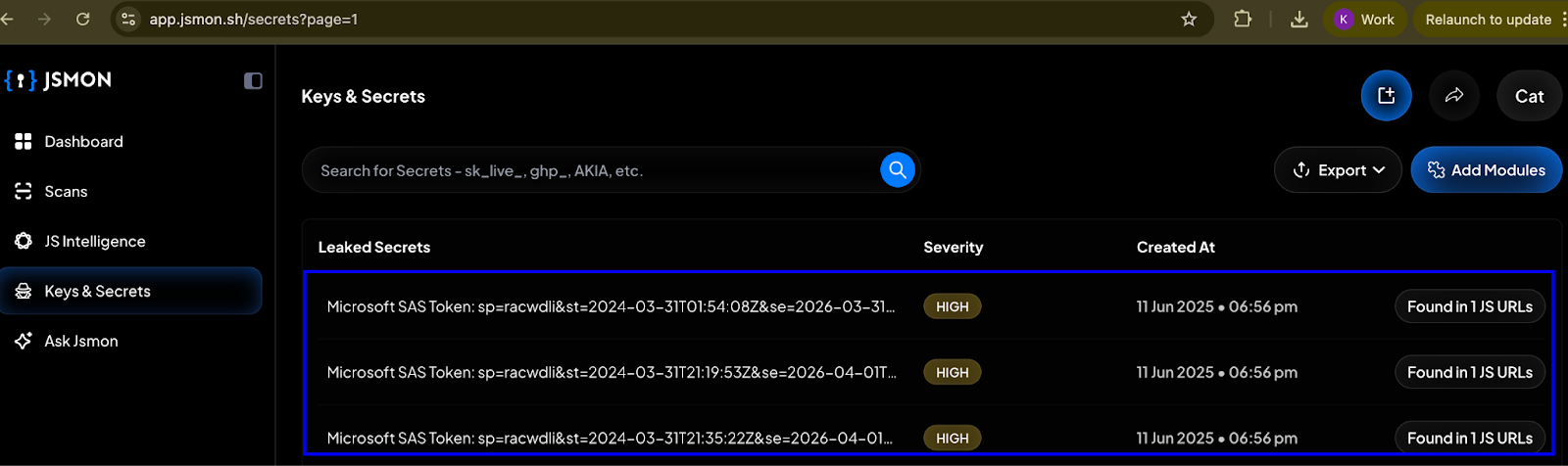

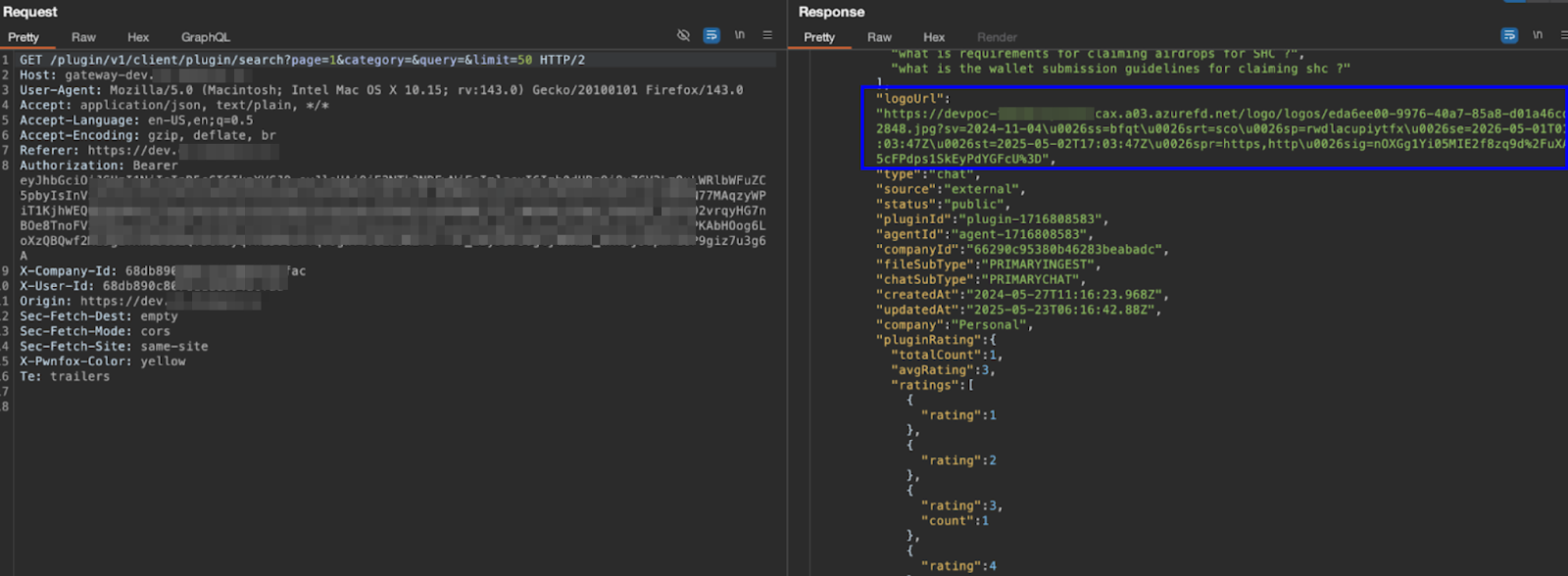
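For a quick local pass before reaching for a dedicated scanner, a plain grep over a checked-out tree can surface candidate SAS URLs. This is only a sketch: the regular expression, the helper name find_sas_urls, and the sample file are assumptions for illustration, and the pattern is far less thorough than a purpose-built tool.

```bash
# Assumed pattern: a blob endpoint URL whose query string carries a sig= field.
SAS_URL_REGEX='https://[a-z0-9]+\.blob\.core\.windows\.net/[^[:space:]"]*[?&]sig=[A-Za-z0-9%+/=]+'

# find_sas_urls DIR: print every matching URL found under DIR.
find_sas_urls() {
  grep -rEoh "$SAS_URL_REGEX" "$1" 2>/dev/null
}

# Demo against a throwaway directory containing one hypothetical leak.
demo_dir=$(mktemp -d)
printf 'const logo = "https://exampleaccount.blob.core.windows.net/logo/a.png?sv=2022-11-02&sp=r&sig=REDACTED";\n' > "$demo_dir/app.js"
find_sas_urls "$demo_dir"
```

This only inspects the current working tree; tokens that were committed and later removed still require a history-aware scan.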

Application logic often embeds SAS tokens directly into frontend files (specifically JavaScript (JS) and CSS) to allow users' browsers to fetch media, fonts, or application-specific data directly from Azure Blob Storage. These tokens are easily visible via a browser's developer tools. To detect such exposures, you can use tools like Jsmon, which continuously monitors and scans all JavaScript assets of an organization for hardcoded keys, secrets, and other sensitive data leaks.

When a web application or mobile app requests a file or attachment, the API response often provides a full, pre-signed URL to the file, which includes the SAS token as a query string parameter. Intercepting or reviewing network traffic reveals these exposed URLs.

Tokens intended for limited sharing (e.g., with partners or for data science collaboration) are sometimes posted directly onto public documentation wikis, blog posts, or configuration guides. These pages may be indexed by search engines or archived.

Due to their multi-year expiry dates, old but still valid tokens can often be found in web archive services like the Wayback Machine, or within outdated public-facing documentation pages that were never fully removed.

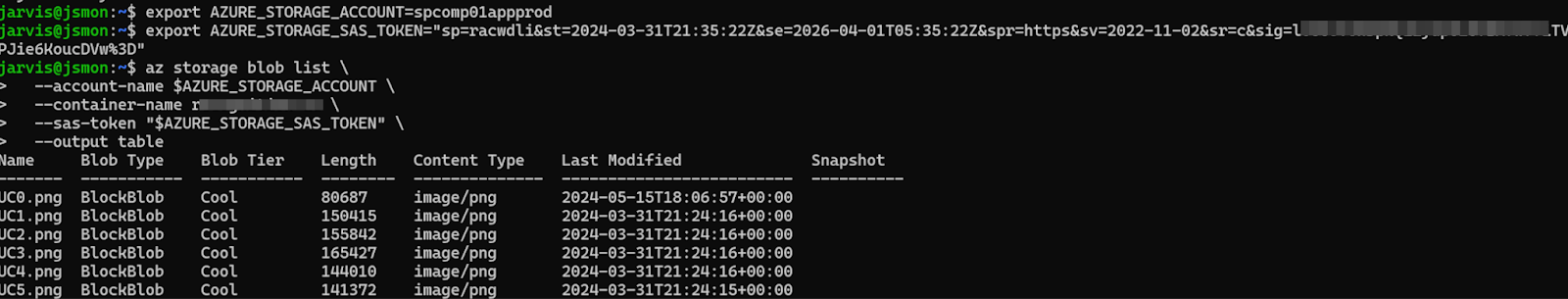

Once a potential SAS token is identified, a security researcher's priority is to determine the severity of the issue by validating the token's scope and permissions against the Confidentiality, Integrity, and Availability (CIA) triad. The Azure Command Line Interface (az cli) is the standard tool used to test these capabilities.

The process involves first identifying the target storage account and then systematically attempting actions that correspond to high-risk permissions. All these validation steps can also be performed using the Azure Storage Explorer graphical application, which utilizes the SAS token for authorization.

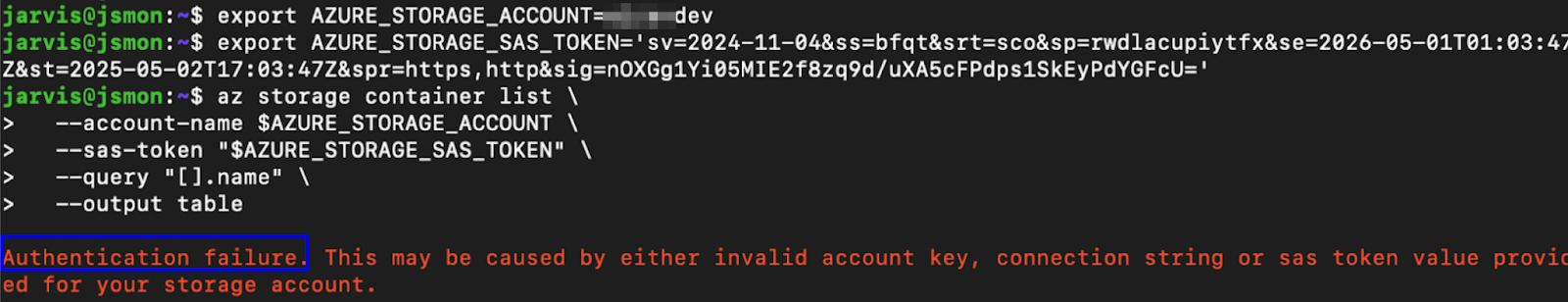

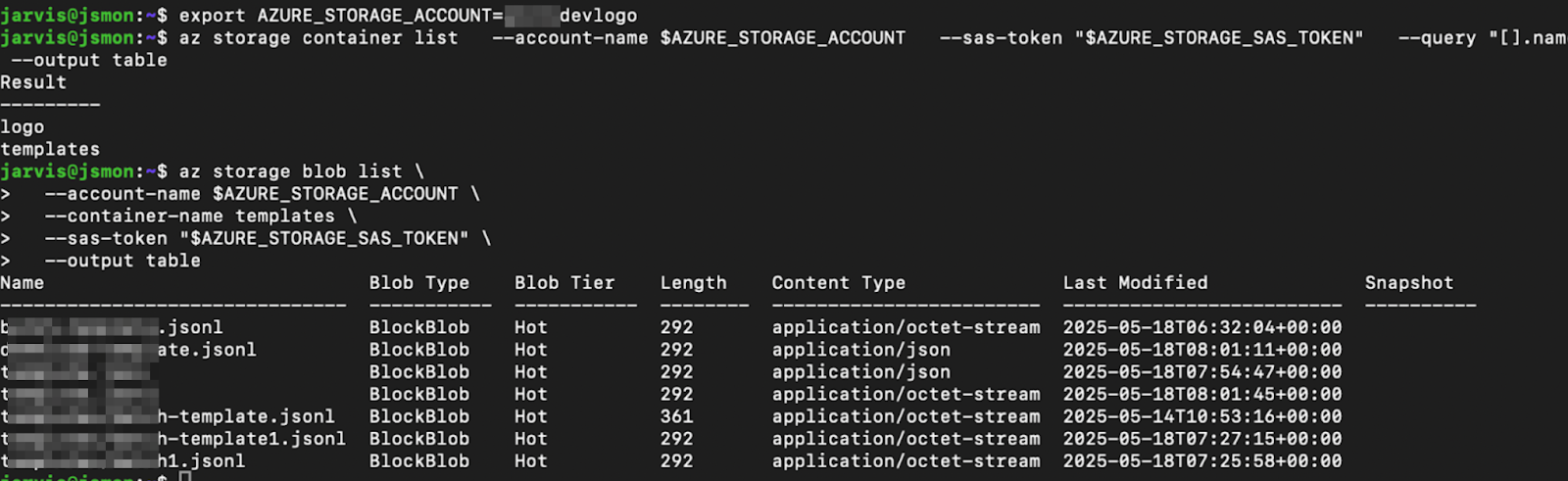

The storage account name is the first part of the subdomain in the SAS URL, followed by .blob.core.windows.net. Extract this information and set up shell variables for easy command execution.
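A minimal sketch of that extraction, using only shell parameter expansion, might look like the following. The URL is a hypothetical placeholder and the variable names are assumptions chosen for this example.

```bash
# Hypothetical SAS URL; replace with the URL under test.
SAS_URL='https://exampleaccount.blob.core.windows.net/examplecontainer/image.png?sv=2022-11-02&sr=c&sp=rl&se=2030-01-01T00:00:00Z&sig=REDACTED'

HOST=${SAS_URL#https://}          # strip the scheme
HOST=${HOST%%/*}                  # keep everything before the first slash
ACCOUNT_NAME=${HOST%%.*}          # first DNS label is the storage account name
SAS_TOKEN=${SAS_URL#*\?}          # everything after the first "?"

URL_PATH=${SAS_URL#https://$HOST/}   # drop scheme and host
URL_PATH=${URL_PATH%%\?*}            # drop the query string
CONTAINER_NAME=${URL_PATH%%/*}       # first path segment is the container

echo "account:   $ACCOUNT_NAME"
echo "container: $CONTAINER_NAME"
echo "token:     $SAS_TOKEN"
```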

If the SAS token is scoped to the service or the entire account rather than to a single container or blob (for an Account SAS, the signed resource types field srt includes s), the ability to list containers is a primary indication of broad information disclosure and high confidentiality risk.
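One way to run this check with the Azure CLI is sketched below. The account name and token are hypothetical placeholders, and the wrapper function list_containers is an assumption of this example so that nothing runs until it is called deliberately.

```bash
# Placeholders; substitute the values extracted from the SAS URL.
ACCOUNT_NAME='exampleaccount'
SAS_TOKEN='sv=2022-11-02&ss=b&srt=sco&sp=rl&se=2030-01-01T00:00:00Z&sig=REDACTED'

# list_containers: attempt to enumerate every container in the account.
list_containers() {
  az storage container list \
    --account-name "$ACCOUNT_NAME" \
    --sas-token "$SAS_TOKEN" \
    --output table
}

# Run only against accounts you are authorized to test:
# list_containers
```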

If this command succeeds, the attacker has a map of all sensitive containers (e.g., backups, user-pii, logs). If it fails, the token may still be valid but is limited to a specific container, which must be identified from the original SAS URL structure (...windows.net/<container-name>/).

Once the container name is known, testing the ability to list and read the contents of that container confirms the data exfiltration risk.
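A sketch of the listing step follows. The container name, account name, and token are placeholders, and list_blobs is a hypothetical wrapper that caps the result count to keep the test low-impact.

```bash
# Placeholders; substitute the values extracted from the SAS URL.
ACCOUNT_NAME='exampleaccount'
CONTAINER_NAME='examplecontainer'
SAS_TOKEN='sv=2022-11-02&sr=c&sp=rl&se=2030-01-01T00:00:00Z&sig=REDACTED'

# list_blobs: attempt to enumerate blobs in one container (first 20 only).
list_blobs() {
  az storage blob list \
    --container-name "$CONTAINER_NAME" \
    --account-name "$ACCOUNT_NAME" \
    --sas-token "$SAS_TOKEN" \
    --num-results 20 \
    --output table
}

# Run only against accounts you are authorized to test:
# list_blobs
```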

A successful output confirms the ability to enumerate files, which is a critical step before large-scale data exfiltration.

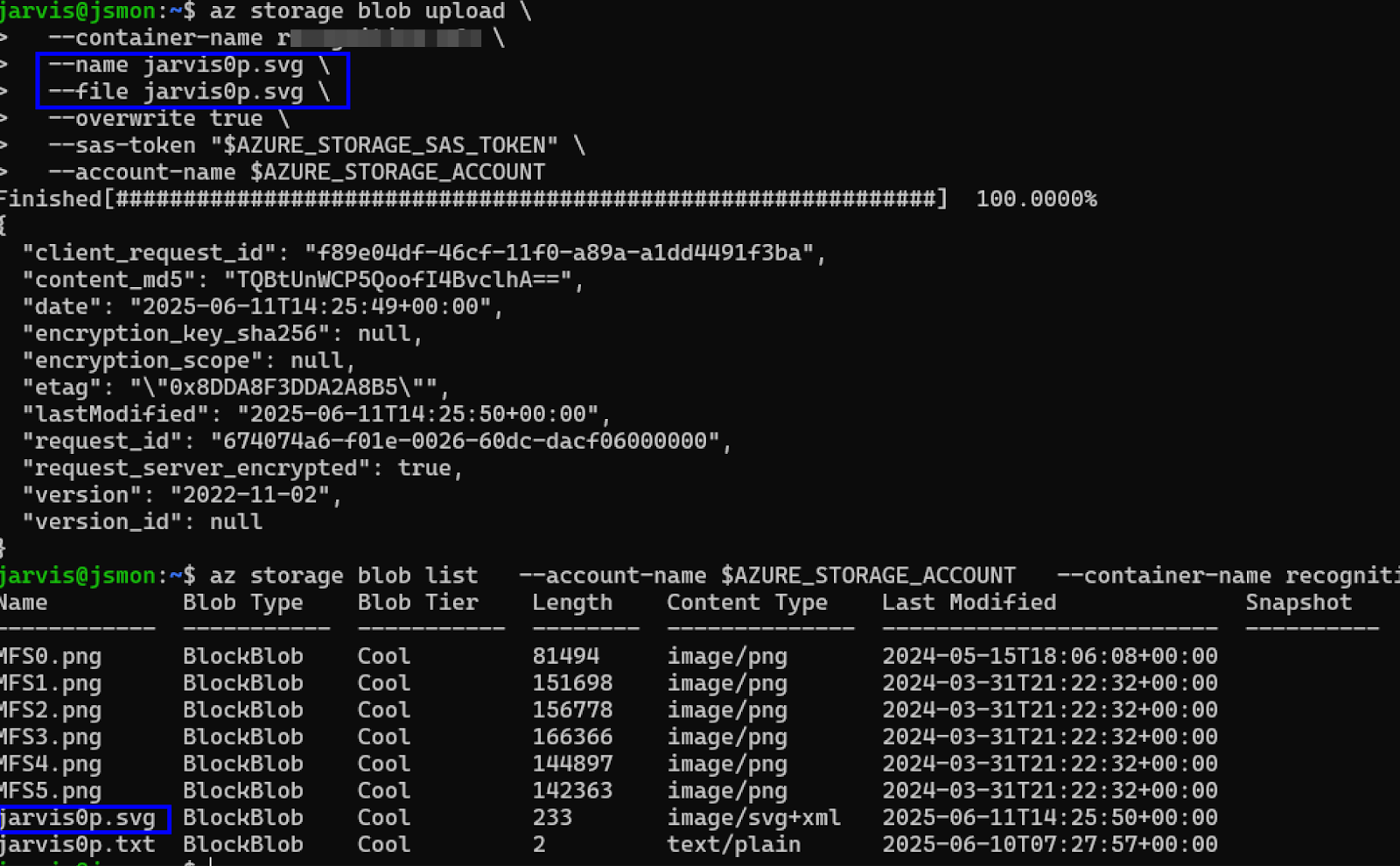

To test the Integrity of the data (the ability to inject malware/XSS payloads) and the risk of Availability attacks (DoS via deletion or cost abuse via mass upload), the researcher attempts to upload a test file.

First, create a simple test file named jarvis0p.svg.
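The file creation and upload could be sketched as follows. The SVG content is a harmless placeholder, the account, container, and token values are hypothetical, and the wrappers upload_test_blob and delete_test_blob are assumptions of this example rather than the exact commands used in the original test.

```bash
# Placeholders; substitute the values extracted from the SAS URL.
ACCOUNT_NAME='exampleaccount'
CONTAINER_NAME='examplecontainer'
SAS_TOKEN='sv=2022-11-02&sr=c&sp=rwdl&se=2030-01-01T00:00:00Z&sig=REDACTED'

# Create a benign, clearly labeled test file.
printf '%s\n' '<svg xmlns="http://www.w3.org/2000/svg" width="120" height="20"><text x="0" y="15">sas-write-test</text></svg>' > jarvis0p.svg

# upload_test_blob: attempt to write the test file into the container.
upload_test_blob() {
  az storage blob upload \
    --container-name "$CONTAINER_NAME" \
    --file jarvis0p.svg \
    --name jarvis0p.svg \
    --account-name "$ACCOUNT_NAME" \
    --sas-token "$SAS_TOKEN"
}

# delete_test_blob: remove the test file again (also exercises the d permission).
delete_test_blob() {
  az storage blob delete \
    --container-name "$CONTAINER_NAME" \
    --name jarvis0p.svg \
    --account-name "$ACCOUNT_NAME" \
    --sas-token "$SAS_TOKEN"
}

# Run only against accounts you are authorized to test:
# upload_test_blob && delete_test_blob
```

Deleting only the file you uploaded keeps the test non-destructive while still demonstrating whether Delete is granted.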


A successful upload confirms a critical integrity vulnerability. If the token also includes the Delete (d) permission, the threat actor has full control over the container, posing maximum risk to Confidentiality, Integrity, and Availability.

The following two case studies illustrate the progression of a SAS token compromise, from accidental exposure to full environment takeover, demonstrating both direct leakage and sophisticated architectural bypass.

A security analysis of a large public domain revealed that several Azure SAS tokens were openly embedded and exposed within publicly accessible front-end JavaScript files. This scenario represents a fundamental failure of secret management, similar to storing credentials directly in application code.

The compromised token analyzed exhibited extreme persistence and wide privilege:

Utilizing these broad permissions, I successfully uploaded a test file (named jarvis0p.txt) into a critical application container, confirming the critical integrity risk afforded by the Write (w) and Create (c) permissions.

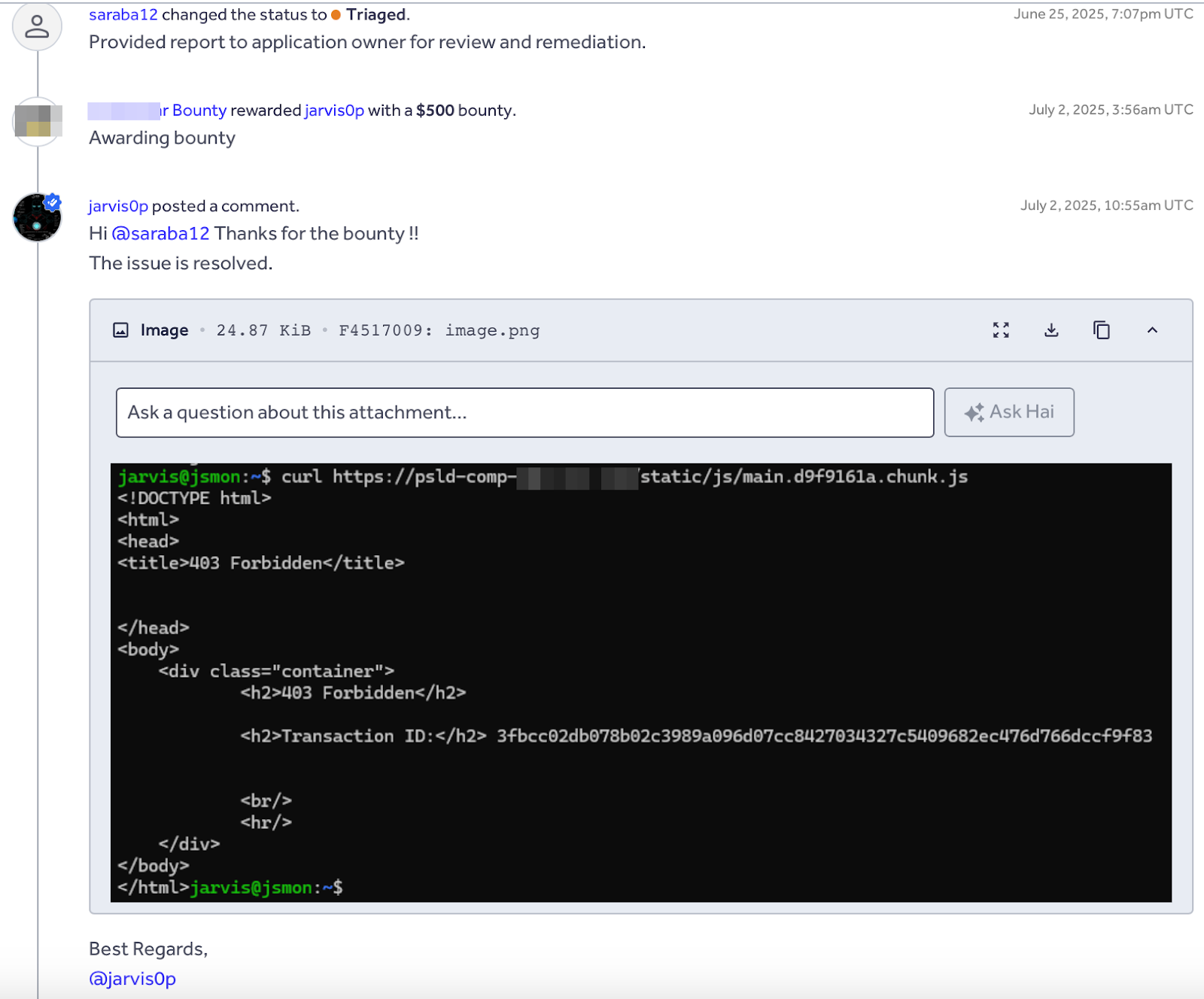

The internal security team promptly confirmed the exposure and took corrective action by disabling public internet access to the affected storage host, effectively revoking unauthorized access. The issue was acknowledged as valid, and a $500 bounty was awarded in recognition of the responsible disclosure.

This case demonstrates that relying on architectural obscurity without strict network enforcement is insufficient protection. The application used an Azure Front Door CDN (.a03.azurefd.net) as a layer of defense.

During initial recon I discovered an overly permissive SAS token exposed in front-end files. The token granted broad account-level access across containers with full operation permissions (read/write/create/delete/list + other operations). However, that Azure Storage account was sitting behind Azure Front Door, and without knowing the storage account hostname I could not directly target it.

Recognizing that the storage account name was the missing key, I began searching for the blob.core.windows.net pattern across all requests and responses. During this review, I identified an API endpoint exposing another storage account name, for instance, exampledev.blob.core.windows.net. However, attempts to exploit it using the same SAS token again returned 401 Unauthorized, suggesting that the application might be leveraging multiple Azure Blob Storage accounts for file management.

With limited information, I shifted focus toward understanding the developer’s naming conventions. After several educated guesses, I inferred that the container name (logo) could be embedded within the storage account name itself. Testing this hypothesis, I tried examplelogodev.blob.core.windows.net, and it worked. This discovery granted full access to the underlying Azure Blob Storage account, effectively achieving complete storage account takeover through the previously exposed SAS token.

Due to consistent naming conventions, the same enumeration and exploitation technique applied to both the development (exampledevlogo) and production (exampleprodlogo) storage accounts, leading to full R/W/D access across sensitive environments. This confirms that architectural controls, such as Front Door, act merely as a thin layer of obscurity if network access is not strictly restricted at the storage origin, making token integrity paramount.
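The guessing step above was done by hand, but the same idea can be sketched as a small candidate generator. Everything here is illustrative: the function name candidate_accounts and the particular orderings tried are assumptions, and the inputs are the sanitized example names from this case study.

```bash
# candidate_accounts BASE LABEL ENV...: print plausible storage hostnames
# built from a base name, a container-style label, and environment tags.
candidate_accounts() {
  base=$1
  label=$2
  shift 2
  for env in "$@"; do
    for name in "$base$env" "$base$label$env" "$base$env$label" "$label$base$env"; do
      echo "$name.blob.core.windows.net"
    done
  done
}

candidate_accounts example logo dev prod
```

Each candidate would then be tested with the exposed token, and only within the scope the program owner has authorized.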

Mitigating the threat posed by overly permissive SAS tokens requires an enterprise-wide shift toward preventative security controls across policy, architecture, and monitoring.

Organizations must strictly enforce the principle of least privilege in token generation.

First, prioritize the use of User Delegation SAS (UDSAS) whenever authentication via Microsoft Entra ID is feasible. The built-in, non-negotiable seven-day maximum expiry on UDSAS tokens provides a powerful, automated temporal control against long-lived access.

Second, tokens must grant the absolute minimum required permissions (sp) for the specific task. If an application only needs to read files for display, only the Read (r) permission should be granted. Furthermore, the resource scope (sr) should be strictly limited to the specific blob (sr=b) rather than the entire container (sr=c) if container-level listing is not required. The use of the powerful, multi-service Account SAS should be avoided entirely if a Service SAS or UDSAS can fulfill the requirement.
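As one possible way to apply both recommendations with the Azure CLI, the sketch below requests a read-only User Delegation SAS for a single blob. The account, container, and blob names are hypothetical, the wrapper make_read_only_sas is an assumption of this example, and the caller is assumed to be signed in with an identity that is allowed to request a user delegation key.

```bash
# Placeholders for the resource being shared.
ACCOUNT_NAME='exampleaccount'
CONTAINER_NAME='examplecontainer'
BLOB_NAME='image.png'

# make_read_only_sas EXPIRY: print a read-only, HTTPS-only User Delegation SAS
# for one blob. EXPIRY is a UTC timestamp such as 2030-01-01T12:00Z.
make_read_only_sas() {
  az storage blob generate-sas \
    --account-name "$ACCOUNT_NAME" \
    --container-name "$CONTAINER_NAME" \
    --name "$BLOB_NAME" \
    --permissions r \
    --expiry "$1" \
    --https-only \
    --auth-mode login \
    --as-user \
    --output tsv
}

# Example (requires a prior "az login"):
# make_read_only_sas '2030-01-01T12:00Z'
```

Passing the expiry as an argument forces the caller to choose a lifetime explicitly instead of inheriting a long default.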

SAS tokens must be treated as volatile secrets. Hard-coding tokens directly into application code, as seen in Case Study A, creates an enduring security flaw.

Best practices dictate configuring SAS expiration policies to be as short as operationally possible. For any non-UDSAS token, a strict organizational policy should define a minimal time interval for expiration. Secure automated pipelines must be implemented to manage the token lifecycle, using services like Azure Key Vault to securely generate, inject, and periodically rotate tokens. This ensures that even if a token is briefly exposed, its utility to an attacker is severely limited by time.
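A sketch of two related account-level controls is shown below: setting a SAS expiration policy and rotating an account key, which invalidates Account and Service SAS tokens signed with that key. The resource names and wrapper functions are hypothetical, and how strictly the expiration policy is enforced depends on the account configuration, so treat it as a guardrail to verify rather than a guarantee.

```bash
# Placeholders for the storage account being hardened.
ACCOUNT_NAME='exampleaccount'
RESOURCE_GROUP='example-rg'

# set_sas_expiration_policy PERIOD: PERIOD uses the d.hh:mm:ss format,
# for example 1.00:00:00 for one day.
set_sas_expiration_policy() {
  az storage account update \
    --name "$ACCOUNT_NAME" \
    --resource-group "$RESOURCE_GROUP" \
    --sas-expiration-period "$1"
}

# rotate_account_key KEY: KEY is "primary" or "secondary". Tokens signed
# with the rotated key stop working; User Delegation SAS are unaffected.
rotate_account_key() {
  az storage account keys renew \
    --account-name "$ACCOUNT_NAME" \
    --resource-group "$RESOURCE_GROUP" \
    --key "$1"
}

# Examples:
# set_sas_expiration_policy '1.00:00:00'
# rotate_account_key primary
```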

Relying solely on token obscurity behind a CDN is an insufficient defense, as demonstrated by Case Study B.

Organizations should utilize a Content Delivery Network (CDN) or Azure Front Door as the primary entry point for public assets, preventing direct access to the storage origin. Crucially, the security defense must be completed at the origin: Network access controls on the backend storage account must be configured to disable all public access and restrict ingress traffic to only accept requests originating from the trusted Front Door or CDN endpoints. This prevents enumeration and direct exploitation even if a high-privilege SAS token is compromised. Furthermore, to limit the blast radius, separate storage accounts or containers should be maintained for public, low-sensitivity files, distinct from accounts containing high-sensitivity PII, logs, or backups.
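The origin-side lockdown could begin with something like the sketch below, which denies network access by default and turns off anonymous blob access. The resource names and the wrapper lock_down_storage_origin are hypothetical, and the additional rules or private connectivity needed to admit only Front Door or CDN traffic are environment-specific and deliberately not shown.

```bash
# Placeholders for the storage account being hardened.
ACCOUNT_NAME='exampleaccount'
RESOURCE_GROUP='example-rg'

# lock_down_storage_origin: deny network access by default, disable
# anonymous blob access, and require HTTPS.
lock_down_storage_origin() {
  az storage account update \
    --name "$ACCOUNT_NAME" \
    --resource-group "$RESOURCE_GROUP" \
    --default-action Deny \
    --allow-blob-public-access false \
    --https-only true
}

# Apply only after trusted network paths have been configured, otherwise
# legitimate traffic will be blocked as well:
# lock_down_storage_origin
```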

Continuous monitoring and auditing are essential to detect and react to token exposure and anomalous usage.

Organizations must enable Azure Storage Analytics to ensure all access to storage resources is logged. Security teams should establish dedicated monitoring dashboards using tools like Azure Monitor and Log Analytics (for example, KQL queries on StorageBlobLogs filtered for AuthenticationType contains "SAS") to track token usage patterns and immediately flag anomalous activity or exploitation attempts against an exposed token. Finally, robust preventive controls, such as Azure Policy, should be used to continuously enforce security standards, monitoring storage accounts for overly broad network access controls, the presence of anonymous access, or long-lived SAS policies. Proactive credential scanning tools must also be deployed to identify exposed SAS tokens inadvertently committed to public or internal code repositories.
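A starting point for such a query, run through the Azure CLI, is sketched below. It assumes blob resource logs are already flowing into a Log Analytics workspace; the workspace ID is a placeholder, the 24-hour window and grouping columns are arbitrary choices for illustration, and the wrapper query_sas_usage is hypothetical.

```bash
# Placeholder Log Analytics workspace ID (a GUID in practice).
WORKSPACE_ID='00000000-0000-0000-0000-000000000000'

# Summarize SAS-authenticated requests over the last day by caller and operation.
SAS_USAGE_QUERY='StorageBlobLogs
| where TimeGenerated > ago(24h)
| where AuthenticationType contains "SAS"
| summarize Requests = count() by CallerIpAddress, OperationName, StatusCode
| order by Requests desc'

# query_sas_usage: run the query against the workspace.
query_sas_usage() {
  az monitor log-analytics query \
    --workspace "$WORKSPACE_ID" \
    --analytics-query "$SAS_USAGE_QUERY" \
    --output table
}

# Example (requires the Azure CLI log-analytics extension):
# query_sas_usage
```

Unfamiliar caller addresses or bursts of delete and write operations in the result are natural candidates for alert rules.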

Azure Shared Access Signatures are indispensable tools for building scalable cloud applications, but their inherent flexibility is also their greatest security liability. The evidence from multiple compromises indicates that the risk associated with these tokens is not a flaw in the Azure feature itself, but rather a systemic organizational failure in implementing governance, training, and policy.

An overly permissive SAS token with a long expiry date is a critical data breach accelerator, capable of transforming a localized application bug into a 38 TB corporate data exposure. To effectively neutralize this threat, security teams must treat SAS token lifecycle management as a core tenet of the Zero Trust architecture. By shifting focus from reactive detection to proactive prevention (prioritizing User Delegation SAS, enforcing tight temporal constraints, applying the principle of least privilege, and pairing tokens with strict network isolation), organizations can ensure that necessary access delegation does not inadvertently become an irreversible security compromise.

A SAS token is a signed Uniform Resource Identifier (URI) that grants temporary, delegated access to specific Azure Storage resources (like Blob Storage) without exposing high-value, long-term credentials such as the Storage Account Access Keys.

When a SAS token is configured with broad permissions (full control) and an extended expiry time (years into the future), it essentially functions as an exposed secondary account key. If this token is compromised, it acts as a persistent backdoor, granting unauthorized actors nearly unrestricted access to the entire storage account, leading to critical data breaches.

The four critical impacts are:

- Complete data confidentiality breach: Attackers can list and exfiltrate sensitive files, PII, logs, and backups.
- Integrity compromise and malware distribution: Attackers can modify or replace legitimate files, enabling malware distribution or Web Application Hijacking (XSS) by injecting malicious code into web assets.
- Availability risk via Denial of Service (DoS): The Delete permission allows attackers to wipe entire containers or storage accounts, crippling application functionality and causing severe downtime.
- Financial abuse and cloud cost inflation: Attackers can upload arbitrary, enormous volumes of illicit data, leveraging the victim's cloud subscription and causing unexpected spikes in billing costs.

The primary vector for compromise is accidental publication in publicly accessible locations, including:

- Public code repositories (like GitHub or GitLab) within configuration files or inline code.
- Client-side web assets, such as JavaScript (JS) or CSS files, visible via browser developer tools.
- API and file URLs returned in a web application or mobile app response.
- Public documentation, wikis, and configuration guides.

The recommended best practice is to adhere strictly to the Principle of Least Privilege:

- Prioritize User Delegation SAS (UDSAS), which has a non-negotiable maximum expiry of seven days and is secured via Microsoft Entra ID.
- Grant the absolute minimum required permissions (e.g., only 'r' for read) and limit the resource scope to a specific blob (sr=b) instead of an entire container (sr=c).
- Avoid using the powerful Account SAS if a Service SAS or UDSAS can meet the requirement.

Zero Trust architecture mandates that organizations move from reactive detection to proactive prevention. This involves:

- Enforcing least privilege in token creation.
- Implementing short time limits and automated rotation policies for SAS tokens.
- Enforcing architectural isolation and strict network controls to disable all public access and restrict traffic to trusted endpoints (like CDN or Front Door).
- Continuous monitoring and auditing of token usage via tools like Azure Storage Analytics and Log Analytics.

Security teams and researchers typically use the Azure Command Line Interface (az cli) to systematically attempt high-risk actions. This process includes:

- Attempting to list all containers to test the 'l' (List) permission for confidentiality risk.
- Attempting to list blobs within a container to confirm data exfiltration risk.
- Attempting to upload a file to test the 'w' (Write), 'c' (Create), and 'u' (Update) permissions for integrity and availability risks.